1. Definition

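Chinese definition: A recurrent neural network (RNN) is a class of recursive neural network that takes sequence data as input, recurses along the direction in which the sequence evolves, and connects all of its nodes (recurrent units) in a chain.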

English definition: A Recurrent Neural Network (RNN) is a type of neural network in which the output from the previous step is fed as input to the current step (hence the name "recurrent"). The main and most important feature of an RNN is its hidden state, which remembers some information about a sequence[1].

Recurrent neural networks (RNNs) are a state-of-the-art algorithm for sequential data and are used by Apple’s Siri and Google’s voice search. Like many other deep learning algorithms, RNNs are relatively old. They were first created in the 1980s, but only in recent years has their true potential become apparent. The increase in computational power, the massive amounts of data now available, and the invention of long short-term memory (LSTM) in the 1990s have brought RNNs to the foreground[1].

1.1. Neural Networks (Artificial Neural Network, ANN)

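An artificial neural network (ANN), or simply neural network (NN), is a mathematical or computational model that imitates the structure and function of biological neural networks. A neural network computes through a large number of interconnected artificial neurons. In most cases an ANN can change its internal structure based on external information, which makes it an adaptive system. Modern neural networks are nonlinear statistical data-modeling tools. They are commonly used to model complex relationships between inputs and outputs, or to discover patterns in data.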

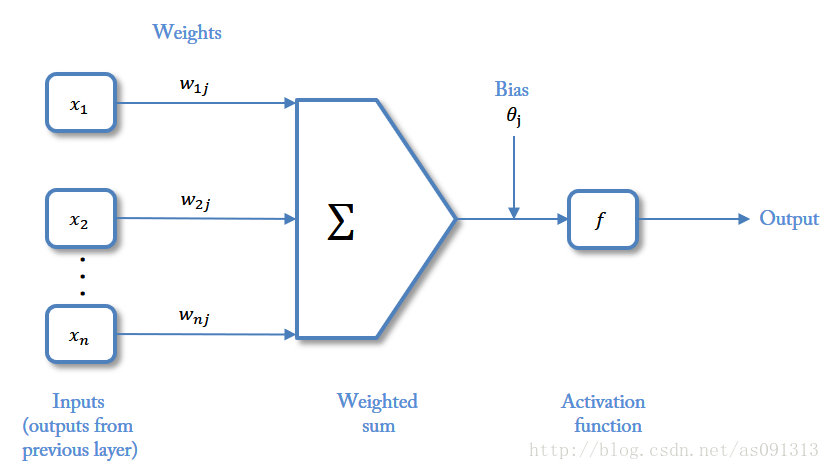

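A neural network is a computational model made up of many nodes (or "neurons") and the connections between them. Each node represents a particular output function, called the activation function. Each connection between two nodes carries a weighting applied to the signal passing through it, called the weight; the weights act as the network's memory. The network's output depends on how it is connected, on the weight values, and on the activation function. The network itself is usually an approximation of some algorithm or function found in nature, or it may express a logical strategy.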

$$
\text{output} = f(x) =
\begin{cases}
1 & \text{if } \sum_i w_i x_i + b \ge 0 \\
0 & \text{if } \sum_i w_i x_i + b < 0
\end{cases}
$$
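The threshold formula above can be tried out with a minimal sketch in plain Python (no libraries). The function name `perceptron` and the hand-picked weights `w_and`, `b_and` are illustrative assumptions. They were chosen so that the unit happens to compute a logical AND; they are not learned.

```python
def perceptron(x, w, b):
    """Threshold unit: returns 1 if sum_i w_i * x_i + b >= 0, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z >= 0 else 0


# Hypothetical hand-picked weights: with these, the unit behaves like logical AND.
w_and, b_and = [1.0, 1.0], -1.5

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, perceptron(x, w_and, b_and))  # only (1, 1) prints 1
```

Training a network amounts to finding such weights automatically instead of picking them by hand.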

An artificial neural network can be represented by the figure below:

The activation function f(x) can take the following forms:

Types of Activation Functions (sources: GeeksforGeeks; 5 Deep Learning and Neural Network Activation Functions to Know)

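- Step Function: $H(x) = 1$ if $x \ge 0$, else $0$

- Sigmoid Function: $H(x) = \dfrac{1}{1 + e^{-x}}$

- Tanh Function: $H(x) = \tanh(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

- ReLU: $H(x) = \max(0, x)$; ReLU stands for Rectified Linear Unit

- Leaky ReLU: $H(x) = ax$ if $x < 0$, else $x$, where $a$ is a small positive constant (e.g. 0.01)

- SoftMax Function: $\mathrm{softmax}(x)_i = \dfrac{e^{x_i}}{\sum_j e^{x_j}}$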
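Below is a minimal sketch of the activation functions listed above, using only the standard `math` module. The Leaky ReLU slope `a=0.01` is an assumed default. The max-subtraction in `softmax` and the two-branch `sigmoid` are common numerical-stability tricks that do not change the mathematical result.

```python
import math


def step(x):
    return 1.0 if x >= 0 else 0.0


def sigmoid(x):
    # Two branches so that math.exp never receives a large positive argument (avoids OverflowError).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x):
    return math.tanh(x)  # equals (e^x - e^-x) / (e^x + e^-x)


def relu(x):
    return max(0.0, x)


def leaky_relu(x, a=0.01):
    # a: small slope for negative inputs (0.01 is an assumed, commonly used value)
    return a * x if x < 0 else x


def softmax(xs):
    # Subtracting the maximum leaves the result unchanged but keeps exp() from overflowing.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0), relu(-2.0), leaky_relu(-2.0))
print(softmax([1.0, 2.0, 3.0]))  # approximately [0.090, 0.245, 0.665]
```

Unlike the others, softmax acts on a whole vector: its outputs are positive and sum to 1. This is why it is typically used on a classifier's final layer.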

2. Training RNN

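The formula for calculating the current state:

$$h_t = f(h_{t-1}, x_t)$$

where:

$h_t$ -> current state

$h_{t-1}$ -> previous state

$x_t$ -> input state

Formula for applying the activation function (tanh):

$$h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t)$$

where:

$W_{hh}$ -> weight at the recurrent neuron

$W_{xh}$ -> weight at the input neuron

Formula for calculating the output:

$$y_t = W_{hy} h_t$$

where:

$y_t$ -> output

$W_{hy}$ -> weight at the output layer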
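To make the state and output formulas concrete, here is a sketch of one forward pass in plain Python, with matrices stored as lists of rows. The helper names (`matvec`, `rnn_step`, `rnn_output`), the sizes, and the weight values are all hypothetical. Biases are omitted to match the formulas, although many implementations add a bias term inside the tanh.

```python
import math


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v (a list)."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]


def rnn_step(h_prev, x_t, W_hh, W_xh):
    """h_t = tanh(W_hh h_{t-1} + W_xh x_t), without a bias term."""
    a = [u + v for u, v in zip(matvec(W_hh, h_prev), matvec(W_xh, x_t))]
    return [math.tanh(a_i) for a_i in a]


def rnn_output(h_t, W_hy):
    """y_t = W_hy h_t (linear output, no activation)."""
    return matvec(W_hy, h_t)


# Hypothetical sizes: 2 hidden units, 1 input feature, 1 output; weights chosen arbitrarily.
W_hh = [[0.5, -0.1], [0.2, 0.3]]
W_xh = [[1.0], [-0.5]]
W_hy = [[1.0, 2.0]]

h = [0.0, 0.0]                      # h_0: initial state
for x in ([1.0], [0.5], [-1.0]):    # a toy input sequence of length 3
    h = rnn_step(h, x, W_hh, W_xh)  # the new h_t is fed back as h_{t-1} at the next step
print(h, rnn_output(h, W_hy))
```

The same three weight matrices are reused at every time step. This sharing is what lets one RNN process sequences of any length.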

2.1.1. Training through RNN

- A single time step of the input is provided to the network.

- The network calculates its current state $h_t$ from the current input $x_t$ and the previous state $h_{t-1}$.

- The current $h_t$ becomes $h_{t-1}$ for the next time step.

- This is repeated for as many time steps as the problem requires, combining information from all previous states.

- Once all time steps are complete, the final current state is used to calculate the output.

- The output is then compared with the actual output, i.e., the target output, and an error is computed.

- The error is then back-propagated through the network (back-propagation through time) to update the weights, which is how the RNN is trained.
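The training steps above can be sketched for the smallest possible case. The model is a scalar RNN (one input, one hidden unit, one output) with squared-error loss on the final output only. Gradients are computed by walking backward through the time steps (back-propagation through time). Everything here is an assumption for illustration, not a recommended setup: the function names, toy sequence, target, learning rate, and step count.

```python
import math


def forward(params, xs):
    """Run the scalar RNN h_t = tanh(w_hh*h_{t-1} + w_xh*x_t) from h_0 = 0; output y = w_hy*h_T."""
    w_hh, w_xh, w_hy = params
    hs = [0.0]                                   # hs[t] holds h_t
    for x in xs:
        hs.append(math.tanh(w_hh * hs[-1] + w_xh * x))
    return hs, w_hy * hs[-1]


def loss_and_grads(params, xs, target):
    """Squared-error loss 0.5*(y - target)^2 and its gradients via back-propagation through time."""
    w_hh, w_xh, w_hy = params
    hs, y = forward(params, xs)
    loss = 0.5 * (y - target) ** 2
    dy = y - target                              # dL/dy
    g_hh, g_xh, g_hy = 0.0, 0.0, dy * hs[-1]
    dh = dy * w_hy                               # dL/dh_T
    for t in range(len(xs), 0, -1):              # step T back to step 1
        da = dh * (1.0 - hs[t] ** 2)             # through tanh: tanh'(a) = 1 - tanh(a)^2
        g_hh += da * hs[t - 1]
        g_xh += da * xs[t - 1]
        dh = da * w_hh                           # dL/dh_{t-1}, passed to the earlier step
    return loss, (g_hh, g_xh, g_hy)


def train(params, xs, target, lr=0.05, steps=300):
    """Plain gradient descent: repeat forward pass, BPTT, and weight update."""
    for _ in range(steps):
        _, grads = loss_and_grads(params, xs, target)
        params = tuple(p - lr * g for p, g in zip(params, grads))
    return params, loss_and_grads(params, xs, target)[0]


xs, target = [1.0, 0.5, -1.0], 0.8   # hypothetical toy sequence and target output
params0 = (0.1, 0.1, 0.1)            # hypothetical initial (w_hh, w_xh, w_hy)
loss0, _ = loss_and_grads(params0, xs, target)
params, loss = train(params0, xs, target)
print(f"loss before: {loss0:.4f}, after: {loss:.4f}")
```

Because these gradients are derived by hand, it is worth confirming them with a finite-difference check before trusting the training loop. Such a check perturbs each weight slightly and compares the resulting change in loss with the computed gradient.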

2.1.2. Advantages of Recurrent Neural Network

- An RNN retains information from earlier time steps in its hidden state. This ability to remember previous inputs is what makes it useful for time-series prediction; the Long Short-Term Memory (LSTM) variant extends it to longer-range dependencies.

- Recurrent neural networks are even used with convolutional layers to extend the effective pixel neighborhood.

2.1.3. Disadvantages of Recurrent Neural Network

- Vanishing and exploding gradient problems.

- Training an RNN is difficult, in large part because of these gradient problems.

- It struggles to process very long sequences when tanh or ReLU is used as the activation function.
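The gradient problems above can be seen in the scalar recurrence $h_t = \mathrm{act}(w\,h_{t-1} + x)$. By the chain rule, $\partial h_T / \partial h_0$ is a product of $T$ factors $w \cdot \mathrm{act}'(a_t)$. The sketch below multiplies those factors out; the function name and the constant input `x=0.5` are assumptions. With tanh and $|w| < 1$, every factor has magnitude below 1, so the product shrinks toward zero. With an identity activation and $|w| > 1$, it grows like $w^T$.

```python
import math


def grad_through_time(w, T, activation="tanh", x=0.5):
    """dh_T/dh_0 for the scalar recurrence h_t = act(w*h_{t-1} + x), starting at h_0 = 0.

    activation: "tanh", or "linear" for the identity function (no squashing).
    """
    h, g = 0.0, 1.0
    for _ in range(T):
        a = w * h + x
        if activation == "tanh":
            h = math.tanh(a)
            g *= w * (1.0 - h * h)  # dh_t/dh_{t-1} = w * tanh'(a_t), with tanh'(a) = 1 - tanh(a)^2
        else:
            h = a
            g *= w                  # identity activation: dh_t/dh_{t-1} = w
    return g


for T in (5, 20, 50):
    print(T, grad_through_time(0.5, T), grad_through_time(1.5, T, activation="linear"))
```

Since the weight updates for early time steps are scaled by such products, long sequences give early inputs almost no influence (vanishing) or very unstable updates (exploding).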

2.1.4. Applications of Recurrent Neural Network

- Language Modelling and Generating Text

- Speech Recognition

- Machine Translation

- Image Recognition, Face detection

- Time series Forecasting

3. LSTM

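LSTM was proposed by Hochreiter and Schmidhuber, first in technical report FKI-207-95 (1995)[5] and then in a 1997 journal paper, and was later refined and popularized by Alex Graves. LSTM has been very successful on many problems and is widely used. It is explicitly designed to avoid the long-term dependency problem: remembering information over long periods is practically its default behavior, not something it struggles to learn.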
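Here is a minimal sketch of one step of a scalar LSTM cell in plain Python. It follows the commonly used formulation with input, forget, and output gates. Note that the forget gate was added later (Gers, Schmidhuber and Cummins, 2000) and is not part of the original 1995/1997 design. The parameter layout `p[name] = (w_x, w_h, b)`, the gate names, and all values are assumptions for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell; p[name] = (w_x, w_h, b) for each gate."""
    def gate(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # fraction of the old cell state to keep
    i = gate("input", sigmoid)         # fraction of the candidate to write
    o = gate("output", sigmoid)        # fraction of the cell state to expose as h_t
    g = gate("candidate", math.tanh)   # candidate values for the cell state
    c = f * c_prev + i * g             # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Hypothetical parameters: forget gate almost fully open, input gate almost closed.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 1.0
for _ in range(100):
    h, c = lstm_step(0.0, h, c, params)  # zero input at every step
print(h, c)  # c stays close to 1.0 after 100 steps
```

The additive update `c = f * c_prev + i * g` is what lets the cell state persist. With the forget gate near 1 and the input gate near 0, `c` shrinks only by a factor of about σ(10) ≈ 0.99995 per step, and is still about 0.995 after 100 steps.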

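- Hands-on example: text classification with a Transformer https://aistudio.baidu.com/aistudio/projectdetail/1247954 (a complete implementation based on PaddlePaddle). It uses the IMDB dataset, a collection of movie reviews labeled as positive or negative, with 25,000 reviews for training and 25,000 for testing. The dataset's official page is http://ai.stanford.edu/~amaas/data/sentiment/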

4. References

[1] Introduction to Recurrent Neural Network

[2] A Guide to Recurrent Neural Networks: Understanding RNN and LSTM Networks. In this guide to recurrent neural networks, we explore RNNs, long short-term memory (LSTM) and backpropagation.

[5] S. Hochreiter and J. Schmidhuber, Long Short Term Memory, Technical Report FKI-207-95 (1995), https://people.idsia.ch/~juergen//FKI-207-95ocr.pdf

[6] ReLU (Rectified Linear Unit) Activation Function, https://builtin.com/machine-learning/relu-activation-function, https://iq.opengenus.org/relu-activation/

4.1. RNN

- SEQUENCE MODELS AND LONG SHORT-TERM MEMORY NETWORKS https://pytorch.org/tutorials/beginner/nlp/sequence_models_tutorial.html Official PyTorch tutorial; the code has been tested and works. More examples: https://pytorch.org/tutorials/index.html

4.2. PyTorch

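- Understanding the Tensor View mechanism in PyTorch (一文读懂 Pytorch 中的 Tensor View 机制) https://zhuanlan.zhihu.com/p/464384583 2022-02-06. Fairly comprehensive, and fairly long.

- Zhihu machine learning community (知乎机器学习社区) https://www.zhihu.com/column/c_1320399205467795456

- Starting from RNNs: understanding LSTM step by step in plain terms (如何从RNN起步,一步一步通俗理解LSTM) 2023-04-21. Builds on Christopher Olah's blog post, the translation by @Not_GOD, and the other references listed at the end of the article. It adds many explanatory notes and annotations (rarely found in other articles), all aimed at making the material easier to understand.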

4.3. LSTM

- PyTorch tutorial (Chinese translation): Deep Learning with PyTorch: A 60 Minute Blitz (PyTorch 深度学习:60分钟快速入门) https://pytorch.apachecn.org/1.7/02/

- PyTorch official website: https://pytorch.org/

- Installation: `pip3 install torch torchvision torchaudio`

- Random sampling tutorials on Zhihu (随机抽样知乎教程) https://www.zhihu.com/topic/19830575/top-answers

- LSTM implementation in pure Python 2019/05/05. Designed an LSTM recurrent network in pure Python.

- Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/ The article is very long, but well worth consulting.

- LSTM Model Architecture for Rare Event Time Series Forecasting 2019/08/05 In this post, we will review the 2017 paper titled “Time-series Extreme Event Forecasting with Neural Networks at Uber” by Nikolay Laptev, et al. presented at the Time Series Workshop, ICML 2017.

4.4. Transformer

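- The Illustrated Transformer, complete edition (图解Transformer(完整版)) https://blog.csdn.net/longxinchen_ml/article/details/86533005 Google's BERT model, released some time ago, achieved state-of-the-art results on 11 NLP tasks and set the whole NLP field alight. A key factor in BERT's success is the power of the Transformer. Google's Transformer model was first used for machine translation, where it achieved state-of-the-art results at the time. The Transformer fixes the RNN's most criticized weakness, slow training, by using the self-attention mechanism to enable fast parallel computation. It can also be made very deep, fully exploiting the properties of DNN models and improving accuracy. In this article, we examine the Transformer model, take it apart piece by piece, and understand how it works.

- A tour of the huge Transformer family tree (一文带你了解 Transformer 庞大家族系谱!) 2023-04-16. A very detailed survey.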

- Attention Is All You Need, Google, https://arxiv.org/abs/1706.03762, 12 Jun 2017

- The Transformer model explained (Transformer 模型详解) 2023-02-23. Mainly covers the concrete implementation of the Transformer model, following The Illustrated Transformer.

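4.5. Statistics

- Statistics: basic concepts (统计学:基本概念) https://zhuanlan.zhihu.com/p/510727238

- Statistics: definitions of population and sample, and an overview of common statistics (统计学:总体与样本的基本定义&常用统计量概述) https://zhuanlan.zhihu.com/p/358590762?ivk_sa=1024320u

- Statistics: sampling surveys, lecture 01 (introduction: surveys and sampling surveys; basic concepts; common sampling methods) (统计学:抽样调查第01讲) https://zhuanlan.zhihu.com/p/354135768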

4.6. Papers

- Recurrent Neural Networks and Long Short-Term Memory Networks: Tutorial and Survey (15 pages)

- Mathematics of Machine Learning: An introduction (15 pages)

- Tensor Decompositions for Learning Latent Variable Models (60 pages)

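4.7. General

- With this machine-learning diagramming tool, papers and blogs take half the effort! (有了这个机器学习画图神器,论文、博客都可以事半功倍了!) https://zhuanlan.zhihu.com/p/413694810

- Five basic statistical concepts commonly used in data analysis: feature statistics, probability distributions, dimensionality reduction, over- and under-sampling, and Bayesian statistics (数据分析中常用的五个统计学基本概念). Many of the figures are good.

- Back-propagation, an introduction 2016/12/20 http://www.offconvex.org/2016/12/20/backprop/

- The Best Tools for Machine Learning Model Visualization 2023/04/19. Several good ML tools.

- Andrew Ng: all of deep learning in 28 diagrams (吴恩达:28张图全解深度学习知识) 2022-01-26. Covers three areas: deep learning fundamentals (01-13), convolutional networks (14-22), and recurrent networks (23-28).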

5. Others

flowchart LR

X((X)) --->| W | Y((Y))

flowchart LR

X((h0)) ---> Y[h1]